Bios

Dr. Rajesh is an ophthalmology resident at Oregon Health & Science University. Dr. Lee is professor of ophthalmology and the C. Dan and Irene Hunter Endowed Professor at the University of Washington.

DISCLOSURES: Dr. Anand Rajesh has no financial disclosures. Dr. Aaron Lee: U.S. Food and Drug Administration, Amazon, Carl Zeiss Meditec, iCareWorld, Meta, Microsoft, Novartis, Nvidia, Regeneron, Santen Pharmaceutical, Topcon, Alcon, Boehringer Ingelheim, Genentech/Roche, Gyroscope, Janssen, Johnson & Johnson and Verana Health.

Artificial intelligence has arrived, checked in and is waiting in the lobby to be seen by an ophthalmologist. AI in ophthalmology has been around since 2018, when the U.S. Food and Drug Administration cleared the first AI algorithm for autonomous diabetic retinopathy screening. Currently, there are three algorithms available in the United States, with many more available in the European Union, the U.K., China, India and other countries. In this article, we’ll discuss these technologies and the current payment and regulatory barriers for their development, as well as the future challenges that AI faces in clinical adoption.

AI Framework

Broadly speaking, AI refers to a computer analyzing data to learn and perform a task. Machine learning is a subset of AI, and deep learning is a subset of machine learning that uses a neural network architecture. All currently FDA-cleared AI algorithms for DR screening use deep learning models.

A model is a mathematical function that recognizes patterns in data and outputs predictions. Models are trained to make predictions from labeled data. For example, to build a diabetic retinopathy screening model, a team of engineers first creates a model composed of mathematical functions. Next, the model is iteratively fed fundus photographs showing varying degrees of diabetic retinopathy, each labeled to indicate disease severity. The model predicts the diabetic retinopathy severity from each photo, and through this training process it iteratively improves at discerning disease severity.
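
The training process described above can be illustrated with a deliberately tiny sketch. It isn't how any cleared device is built: real DR models are deep neural networks trained on images, whereas this toy uses a one-parameter-per-input logistic model on made-up numbers. The single "feature" per example, the synthetic data generator and all function names are assumptions for illustration only. What carries over is the loop itself: predict, compare with the label, adjust, repeat.

```python
import math
import random


def sigmoid(z):
    """Map any real number to a probability between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))


def make_labeled_examples(n, seed=0):
    """Hypothetical stand-in for labeled fundus photos: each example is
    one numeric feature plus a 0/1 label (1 = disease present)."""
    rng = random.Random(seed)
    examples = []
    for _ in range(n):
        label = rng.randint(0, 1)
        # Diseased examples tend to have higher feature values.
        feature = rng.gauss(1.0 if label else -1.0, 1.0)
        examples.append((feature, label))
    return examples


def mean_loss(weight, bias, examples):
    """Average cross-entropy between the predictions and the labels."""
    total = 0.0
    for x, y in examples:
        p = sigmoid(weight * x + bias)
        p = min(max(p, 1e-12), 1 - 1e-12)  # avoid log(0)
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(examples)


def train(examples, epochs=50, learning_rate=0.1):
    """Full-batch gradient descent: each pass nudges the parameters in
    the direction that reduces the prediction error."""
    weight, bias = 0.0, 0.0
    history = [mean_loss(weight, bias, examples)]
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in examples:
            error = sigmoid(weight * x + bias) - y
            grad_w += error * x
            grad_b += error
        weight -= learning_rate * grad_w / len(examples)
        bias -= learning_rate * grad_b / len(examples)
        history.append(mean_loss(weight, bias, examples))
    return weight, bias, history


examples = make_labeled_examples(200)
weight, bias, history = train(examples)
print(f"loss before training: {history[0]:.3f}")
print(f"loss after training:  {history[-1]:.3f}")
```

The loss recorded in `history` falls as training proceeds, which is the toy equivalent of the model getting better at discerning disease severity.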

Finally, after the model’s been trained, it must be validated on diverse datasets with varying geographic, ethnic, disease and other characteristics to ensure that it generalizes across a broader population. Engineers run tests to make sure that sensitivity, specificity and other metrics meet a specified standard across those datasets. It’s important to remember that even if the model performs well on one dataset, that doesn’t mean it’ll perform equally well on a different, diverse dataset.
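
As a rough sketch of that validation step, the snippet below computes sensitivity and specificity separately for each dataset and checks them against a threshold. The dataset names, the toy labels and predictions, and the threshold values are all made up for illustration; they don't come from any real validation study or regulatory standard.

```python
def sensitivity_specificity(labels, predictions):
    """Both arguments are sequences of 0/1, where 1 = referable disease."""
    pairs = list(zip(labels, predictions))
    tp = sum(1 for y, p in pairs if y == 1 and p == 1)
    fn = sum(1 for y, p in pairs if y == 1 and p == 0)
    tn = sum(1 for y, p in pairs if y == 0 and p == 0)
    fp = sum(1 for y, p in pairs if y == 0 and p == 1)
    if tp + fn == 0 or tn + fp == 0:
        raise ValueError("need at least one diseased and one healthy case")
    return tp / (tp + fn), tn / (tn + fp)


def check_generalization(datasets, min_sensitivity, min_specificity):
    """Evaluate every dataset on its own instead of pooling them, so a
    weak subgroup can't hide behind a strong one."""
    results = {}
    for name, (labels, predictions) in datasets.items():
        sens, spec = sensitivity_specificity(labels, predictions)
        results[name] = {
            "sensitivity": sens,
            "specificity": spec,
            "meets_standard": sens >= min_sensitivity and spec >= min_specificity,
        }
    return results


# Hypothetical toy data: (true labels, model predictions) per site.
datasets = {
    "site_a": ([1, 1, 1, 1, 0, 0, 0, 0, 0, 0], [1, 1, 1, 1, 0, 0, 0, 0, 0, 1]),
    "site_b": ([1, 1, 1, 1, 0, 0, 0, 0, 0, 0], [1, 1, 0, 0, 0, 0, 0, 0, 1, 1]),
}

# Thresholds are illustrative assumptions, not a regulatory requirement.
results = check_generalization(datasets, min_sensitivity=0.85, min_specificity=0.80)
for name, metrics in results.items():
    print(name, metrics)
```

In this toy example the same model passes at one site and fails at the other, which is the situation that validation on diverse datasets is meant to catch.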

Approved devices

In the United States, there are three FDA-cleared AI devices that can autonomously screen for diabetic retinopathy: Digital Diagnostics’ IDx-DR, Eyenuk’s EyeArt and AEYE Health’s AEYE Diagnostic Screening. There are likely more algorithms under development to tackle autonomous DR screening.

The three algorithms are cleared by the FDA for use with slightly different cameras. IDx-DR is cleared for use with the Topcon NW400 camera.1 EyeArt is cleared for the Canon CR-2 AF, Canon CR-2 Plus AF and Topcon NW400 cameras.2 AEYE Diagnostic Screening is compatible with the Topcon NW400 and a portable fundus camera by Optomed.3 The number of photos required per eye also varies slightly, with some algorithms requiring both macula- and disc-centered photos and others requiring only macula-centered images. All of these algorithms work with undilated eyes. Each can autonomously analyze fundus photographs taken in clinics and determine whether the patient has more-than-mild diabetic retinopathy and requires an in-person examination with an ophthalmologist.

Who is using it and where?

Although these AI devices are FDA-cleared, that doesn’t mean that they’re universally used. The Centers for Medicare & Medicaid Services (CMS) publishes annual reports on insurance claims by beneficiaries, which can be used to evaluate utilization of specific medical procedures. Although the first algorithm was cleared in 2018, there was no billing code for insurance reimbursement at that time. In 2021, the Current Procedural Terminology (CPT) code 92229 (Imaging of Retina for Detection or Monitoring of Disease; Point-of-care Autonomous Analysis and Report) was approved for use, allowing providers to make claims for reimbursement from Medicare/Medicaid. A recent article that examined a database comprising roughly 40 percent of all U.S. CMS claims found an increase in the number of claims for CPT code 92229 from 2021 to 2023. The database contained a total of 15,097 claims over that period, with the number increasing each year. Most of these claims came from ZIP codes in metropolitan areas with an academic medical center. The three cities with the most claims were Philadelphia; Dayton, Ohio; and New Orleans.4 Because this dataset was a subset of all CMS claims, it may underestimate the total number of claims for AI DR screening, but the exact number is difficult to estimate. IDx-DR is claimed to be in use in over 1,000 clinics in the United States, but the authors were unable to verify this.

|

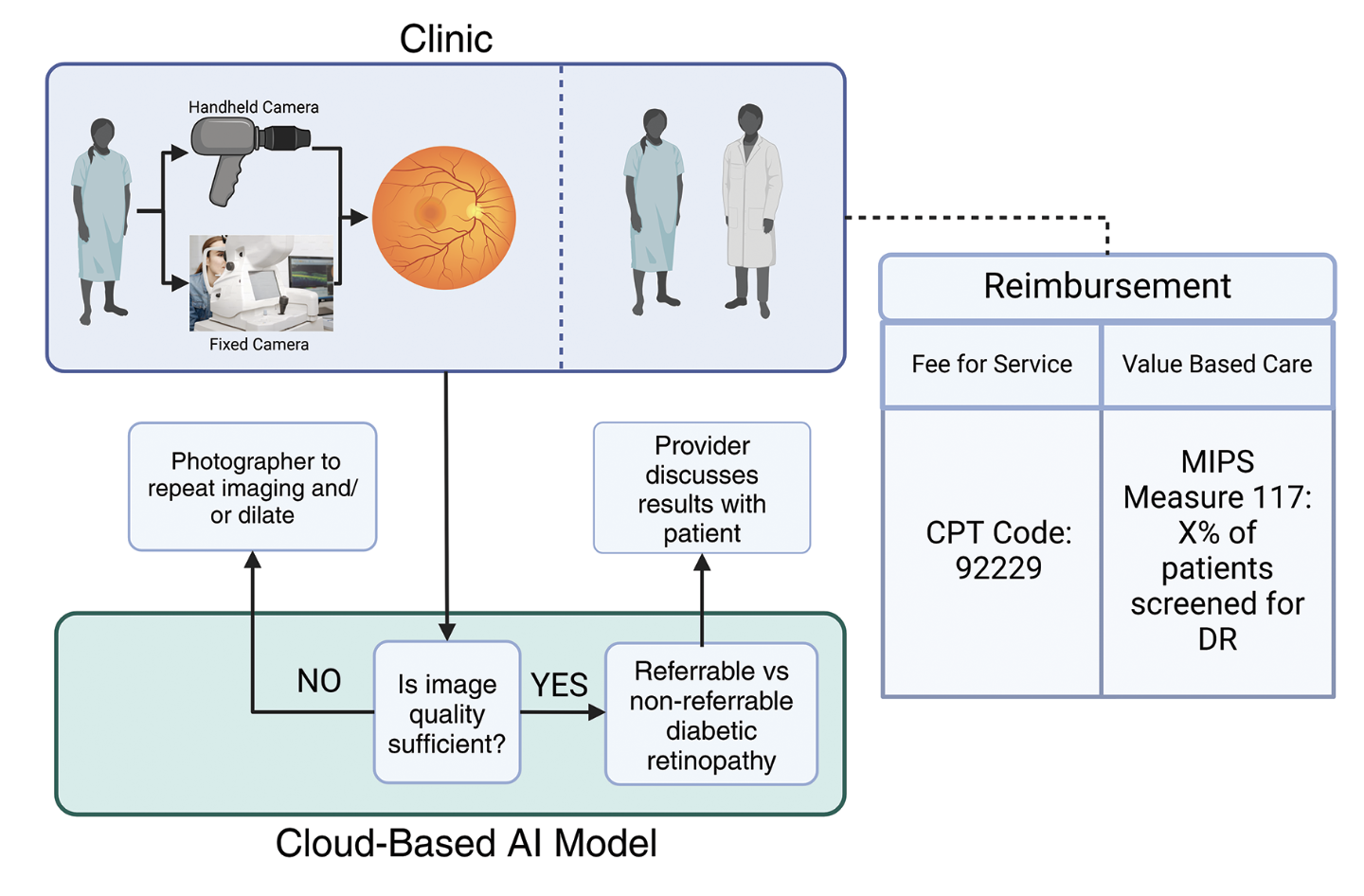

| Figure 1. Schematic of how an AI model will be deployed in a clinic with a patient. |

Regulatory issues

Although these algorithms have been shown to work in scientific papers, getting them cleared by regulatory bodies is a much higher bar. The first FDA-cleared device for AI screening was IDx-DR, and it had the following performance for detecting referable diabetic retinopathy:

• Sensitivity: 87.4 percent (95% CI, 81.9 percent to 92.9 percent);

• Specificity: 89.5 percent (95% CI, 86.9 percent to 93.1 percent);

• Imageability: 96 percent;

• Positive Predictive Value (PPV): 73 percent; and

• Negative Predictive Value (NPV): 96 percent.5,6
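
One point worth keeping in mind when reading these figures is that, unlike sensitivity and specificity, PPV and NPV depend on how common the disease is in the population being screened. The sketch below applies the standard Bayes' rule relationship to the sensitivity and specificity listed above. The prevalence values are hypothetical inputs chosen for illustration and aren't taken from the cited FDA documents.

```python
def predictive_values(sensitivity, specificity, prevalence):
    """Return (PPV, NPV) for a test applied to a population with the
    given disease prevalence. All inputs are fractions between 0 and 1."""
    true_pos = sensitivity * prevalence
    false_neg = (1 - sensitivity) * prevalence
    true_neg = specificity * (1 - prevalence)
    false_pos = (1 - specificity) * (1 - prevalence)
    ppv = true_pos / (true_pos + false_pos)
    npv = true_neg / (true_neg + false_neg)
    return ppv, npv


# Sensitivity and specificity from the list above; the prevalence
# values are assumptions for illustration only.
SENSITIVITY = 0.874
SPECIFICITY = 0.895

for prevalence in (0.05, 0.10, 0.25):
    ppv, npv = predictive_values(SENSITIVITY, SPECIFICITY, prevalence)
    print(f"prevalence {prevalence:.0%}: PPV {ppv:.1%}, NPV {npv:.1%}")
```

With sensitivity and specificity held fixed, a lower prevalence lowers the PPV and raises the NPV, so the same algorithm can produce a different share of false-positive referrals in a different clinic population.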

The FDA requires all subsequent algorithms to show performance equivalent to that of IDx-DR in order to be cleared. Additionally, this must be demonstrated in a prospective study with a diverse cohort. Once an algorithm is cleared, the device manufacturer can’t change any of the model parameters or update the model until it’s been cleared again by the FDA.

Another regulatory area that’s still developing is medicolegal responsibility for malpractice involving an autonomous AI system. Currently, there’s limited legal precedent because few personal injury claims involving medical AI have led to judicial decisions. The American Medical Association7 and expert opinion hold that for autonomous AI, responsibility for an inaccurate diagnosis falls upon the device manufacturer that created the algorithm. In cases where a physician doesn’t follow the AI’s recommendations that are standard-of-care, or when an AI recommends something that isn’t standard-of-care and the physician doesn’t follow it …. 8,9 For medical staff with less legal responsibility, it’s more likely that the AI device manufacturer will assume liability. Another issue is how liability will be assigned when a patient has another serious pathology that appears on the fundus photographs, such as a retinal detachment or a life-threatening choroidal melanoma. These AI models aren’t trained to detect other ocular pathologies, so if a model doesn’t flag such a finding, the patient could suffer serious harm. In contrast, human tele-retinal graders would be able to identify these findings and report them directly to the patient.

Reimbursement structure

For AI DR screening to have the widespread effect on the population that many hope it will, it must be financially viable for clinics to adopt.

In the fee-for-service model, providers make an insurance claim each time they provide AI DR screening to a patient. In 2023, the base, non-location-dependent reimbursement for CPT code 92229 (Remote imaging – automated analysis) was $40.28, compared to CPT code 92227 (Remote imaging – staff review) at $17.35 and CPT code 92228 (Remote imaging – MD interpretation) at $29.14.10

AI screening is currently reimbursed at a higher rate than human grading, and this is likely required to encourage adoption of this technology and allow for increased access to care for diabetic retinopathy screening.11 Commercial insurance reimbursement rates are more difficult to assess because they’re highly variable and not often publicly shared. However, one source found that the median privately negotiated rate for AI screening from Anthem Healthcare in California and New York was $127.81 in 2021.4

Another way that AI screening may provide revenue is through the value-based care reimbursement model. In this payment system, the clinic/group/provider is paid for the overall health of the population that it serves, and groups are incentivized to take the best care of that population based on process-based metrics. In the United States, the Merit-based Incentive Payment System (MIPS) offers payments to groups or providers that meet certain quality metrics. For diabetic eye screening, which is classified under MIPS measure 117, the percentage of a group’s or provider’s patients with diabetes who receive regular diabetic eye screening is recorded. If the measure is met, then Medicare will offer financial incentives to the groups/providers.12 Therefore, groups/providers that aren’t in the fee-for-service payment model can still receive reimbursement for providing AI diabetic eye screening.

Equity and bias

As AI screening becomes more common in clinical practice, it’s essential to ensure that it performs equitably across diverse racial, socio-economic, geographical, gender and other populations. Although these models may perform well in one population, it doesn’t mean that they’ll perform well in a different one.

EyeArt and IDx-DR haven’t shown any substantial drop in performance across different subgroups; however, there are limited studies analyzing these algorithms’ performance head-to-head.13–16 AEYE Health hasn’t published its results in any peer-reviewed scientific journal. While these algorithms have performed well so far, more studies are required to evaluate their performance across diverse datasets to ensure equitable performance.

In conclusion, AI for DR screening is poised to make a significant impact on patient care over the next few years. However, there are still major questions regarding algorithm choice, regulation and reimbursement structures. As this new technology continues to be adopted, we hope that it’s used to deliver care to those with limited access to help prevent the progression of diabetic retinopathy.

REFERENCES

1. LumineticsCore™. Digital Diagnostics. Published March 28, 2023. https://www.digitaldiagnostics.com/products/eye-disease/lumineticscore/. Accessed June 24, 2024.

2. EyeArt - Eyenuk, Inc. ~ Artificial Intelligence Eye Screening. Eyenuk, Inc. Published April 14, 2020. https://www.eyenuk.com/us-en/products/eyeart/. Accessed June 24, 2024.

3. AEYE Diagnostic Screening. AEYE Health. https://www.aeyehealth.com/aeye-diagnostic-screening. Accessed June 30, 2024.

4. Wu K, Wu E, Theodorou B, Liang W, Mack C, Glass L, et al. Characterizing the Clinical Adoption of Medical AI Devices through U.S. Insurance Claims. NEJM AI. 2023;1(1):AIoa2300030.

5. K221183.pdf. https://www.accessdata.fda.gov/cdrh_docs/pdf22/K221183.pdf

6. K223357.pdf. https://www.accessdata.fda.gov/cdrh_docs/pdf22/K223357.pdf

7. ai-2018-board-policy-summary.pdf. https://www.ama-assn.org/system/files/2019-08/ai-2018-board-policy-summary.pdf

8. Saenz AD, Harned Z, Banerjee O, Abràmoff MD, Rajpurkar P. Autonomous AI systems in the face of liability, regulations and costs. NPJ Digit Med. 2023;6(1):185.

9. Mello MM, Guha N. Understanding Liability Risk from Using Health Care Artificial Intelligence Tools. N Engl J Med. 2024;390(3):271-278.

10. Billing and Coding: Remote Imaging of the Retina to Screen for Retinal Diseases. https://www.cms.gov/medicare-coverage-database/view/article.aspx?articleid=58914. Accessed April 5, 2023.

11. AAO to CMS 2024 MPFS PR Comment 09.08.23 1 (1).pdf.

12. Abràmoff MD, Dai T, Zou J. Scaling Adoption of Medical AI: Reimbursement from Value-Based Care and Fee-for-Service Perspectives. NEJM AI. 2024;1(5):AIpc2400083.

13. Tufail A, Rudisill C, Egan C, Kapetanakis VV, Salas-Vega S, Owen CG, et al. Automated Diabetic Retinopathy Image Assessment Software: Diagnostic Accuracy and Cost-Effectiveness Compared with Human Graders. Ophthalmology. 2017;124(3):343-351.

14. Ipp E, Liljenquist D, Bode B, Shah VN, Silverstein S, Regillo CD, et al. Pivotal Evaluation of an Artificial Intelligence System for Autonomous Detection of Referrable and Vision-Threatening Diabetic Retinopathy. JAMA Netw Open. 2021;4(11):e2134254.

15. Lee AY, Yanagihara RT, Lee CS, Blazes M, Jung HC, Chee YE, et al. Multicenter, Head-to-Head, Real-World Validation Study of Seven Automated Artificial Intelligence Diabetic Retinopathy Screening Systems. Diabetes Care. 2021;44(5):1168-1175.

16. Rajesh AE, Davidson OQ, Lee CS, Lee AY. Artificial Intelligence and Diabetic Retinopathy: AI Framework, Prospective Studies, Head-to-head Validation, and Cost-effectiveness. Diabetes Care. 2023;46(10):1728-1739.